A small fraction of internet users is responsible for the majority of toxic behavior online, according to new research examining harassment, abuse, and disruptive conduct across major digital platforms. The findings challenge the assumption that online toxicity is evenly distributed and instead point to a concentrated group that disproportionately shapes digital discourse.

The PNAS Nexus study, conducted by Stanford researchers and collaborators, found that Americans substantially overestimate the prevalence of harmful content producers on social media.

Researchers reported that many users believed that nearly 40 percent of Reddit users had posted severely toxic comments, when in reality, “only 3 percent of active Reddit accounts had done so,” according to the study. This represented a 13-fold overestimation by participants. The paper concluded that “people do not realize that most harmful content on social media is produced by a small, prolific group of users” and that this misperception contributes to broad negative attitudes toward online communities.

This finding closely aligns with global academic research showing similar patterns across platforms. A longitudinal study of Twitter found that the top 1 percent of toxic profiles generated a structurally disproportionate share of harmful content. Those highly toxic accounts frequently posted with little thematic diversity and often exhibited bot-like behavior, amplifying divisive content far beyond their numbers.

Additional studies reinforce this concentration phenomenon. Research on fear speech and online hate shows that even when toxic words are moderated, subtle content designed to incite anxiety or division spreads widely, possibly because it accumulates more engagement and followers than direct hate speech.

Experts emphasize the importance of distinguishing between the perception of toxicity and the actual distribution of harmful behavior. The PNAS Nexus authors noted that overestimating the prevalence of toxic users can lead people to feel pessimistic about social cohesion and the moral state of digital platforms, even when a large majority of users never participate in harmful discourse.

The dynamics of online toxicity have direct consequences for platforms and policymakers. Wikipedia defines online hate speech as content that targets individuals or groups based on protected characteristics, and social networks enable its rapid spread through their scale and speed.

Research also shows that as toxic content spreads, it drowns out neutral and constructive voices, creating an environment in which harmful posts appear more prevalent than they actually are.

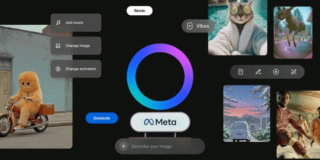

Platforms themselves have acknowledged these patterns. Meta’s Transparency Reports show that a small percentage of recurring accounts are responsible for a large proportion of policy violations, including hate speech and harassment. Likewise, Twitter (now X) compliance documents frequently highlight repeat offenders as primary drivers of harmful content clusters.

The study's sample was drawn only from the United States.