AI Privacy Risks You Can’t Ignore: The Hidden Cost of Smart Tools

From search engines and mobile apps to smart browsers and digital assistants, AI is everywhere—but the AI privacy risks associated with these tools are becoming impossible to ignore as they increasingly demand intrusive access to personal data.

Artificial intelligence is rapidly becoming integrated into every aspect of our digital lives. From phones and productivity tools to browsers and even fast-food drive-throughs, AI now plays a prominent role. But with this integration comes a growing concern: AI privacy risks. The convenience of AI-powered features is being matched—and often outweighed—by how aggressively these tools seek access to user data.

What once raised eyebrows, like a flashlight app requesting access to your contacts and location, is now echoed in AI apps asking for permissions to read your emails, download your calendar, and view your stored photos. These requests are often justified under the premise that such access is needed to “improve” your experience—but at what cost?

Comet Browser Highlights Alarming Data Access in AI Tools

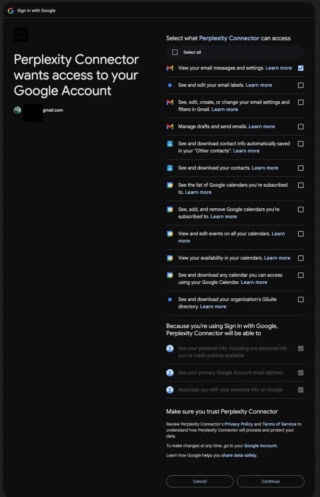

Take Perplexity’s latest AI-powered web browser, Comet, for example. The browser offers AI search and automates tasks like summarizing emails or checking calendars. But a recent review by TechCrunch revealed that Comet requests sweeping permissions when connecting to your Google Account. These include the ability to send emails, download contacts, manage events across all your calendars, and even copy your company’s employee directory.

Perplexity insists that much of the data remains stored locally, yet the permissions still grant the company the right to access and use sensitive information, potentially to train AI models used by others.
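For readers who want to see what reviewing such a consent request could look like in practice, the sketch below flags broad permissions in a list of requested OAuth scopes. It is a minimal illustration, not a description of what Comet or any other product actually requests: the scope strings are standard Google OAuth scopes chosen to mirror the categories described above, while `HIGH_RISK_SCOPES` and `review_scopes` are hypothetical names invented for this example.

```python
# Hypothetical risk table. The keys are standard Google OAuth scope strings,
# but this is an assumption-laden illustration, NOT a list of what any
# specific product requests.
HIGH_RISK_SCOPES = {
    "https://www.googleapis.com/auth/gmail.send": "send email on your behalf",
    "https://www.googleapis.com/auth/contacts.readonly": "read and download your contacts",
    "https://www.googleapis.com/auth/calendar": "manage events on all your calendars",
    "https://www.googleapis.com/auth/directory.readonly": "read your organization's directory",
}


def review_scopes(requested):
    """Split requested scopes into (flagged, other).

    flagged: list of (scope, plain-language description) for high-risk scopes
    other:   list of scopes that are not in the hypothetical risk table
    """
    flagged = [(s, HIGH_RISK_SCOPES[s]) for s in requested if s in HIGH_RISK_SCOPES]
    other = [s for s in requested if s not in HIGH_RISK_SCOPES]
    return flagged, other


if __name__ == "__main__":
    # Example consent request (made up for illustration).
    requested = [
        "openid",
        "https://www.googleapis.com/auth/gmail.send",
        "https://www.googleapis.com/auth/calendar",
    ]
    flagged, other = review_scopes(requested)
    for scope, meaning in flagged:
        print(f"HIGH RISK: {scope} ({meaning})")
    for scope in other:
        print(f"other:     {scope}")
```

The point of the sketch is simply that each permission on a consent screen maps to a concrete capability, and reading them one by one before clicking "Allow" makes the trade-off visible.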

This isn’t an isolated case. Other AI apps, such as call transcribers and meeting assistants, also demand access to real-time conversations, personal calendars, contacts, and more. Even Meta has been testing AI-powered features that scan a user’s photo library, including photos that have not been uploaded yet.

Signal president Meredith Whittaker compared AI agents to “putting your brain in a jar,” citing how some AI products request browser access to make bookings, use stored credit card information, access contact lists, and read browser history and saved passwords. The promise of effortless task automation often masks the disturbing level of access these apps require.

Granting AI Permission to Act Raises Trust and Accuracy Concerns

The AI privacy risks don’t end with access alone. In many cases, AI agents are granted permission to act on your behalf. This demands a dangerous level of trust, not just in the AI’s accuracy, which is still inconsistent, but also in the companies building and profiting from these systems.

When things go wrong, it’s standard practice for engineers or human reviewers at AI companies to analyze your private queries to troubleshoot errors. In other words, your personal data may be seen, stored, or even used to improve future AI performance.

The result? A cost-benefit analysis that heavily skews toward risk. While AI may promise to save a few minutes of your time, the trade-off often involves handing over unrestricted access to your inbox, messages, calendar history, and more—data that could span years.

For anyone concerned about digital security and privacy, granting this level of access should raise immediate red flags. These permissions recall the earlier warning signs of overreaching apps, such as a flashlight app asking for your contacts and location, when users rightly questioned the motives behind the request. The same scrutiny should now apply to AI tools.

Before giving AI apps access to your most personal information, consider what you’re really getting in return. Is the convenience of automated task management truly worth the potential consequences of surrendering your digital identity?

Manik Aftab is a writer for TechJuice, focusing on the intersections of education, finance, and broader social developments. He analyzes how technology is reshaping these critical sectors across Pakistan.

3 min read
