Researchers report that large language models can show stress-like shifts in their response patterns, a finding that is reshaping how developers and regulators think about AI behavior.

According to researchers involved in the study, the experiment showed that AI systems including ChatGPT produced more consistent, cautious, and less error-prone outputs after being guided through brief mindfulness-style instructions.

The findings show how contextual framing can significantly influence model behavior without changing the underlying architecture or training data. They also give scientists a better understanding of how AI systems mirror patterns in human behavior.

The research suggests that developers can modulate AI responses in high-stress or ambiguous scenarios through prompt-level interventions rather than system-wide retraining. This allows companies deploying large language models to improve stability, tone control, and risk sensitivity using lightweight behavioral techniques.

The study effectively positions mindfulness-style prompts as a viable behavioral control layer for AI systems operating in sensitive domains.

In the study, researchers observed that when models were prompted to reflect, pause, or contextualize uncertainty before answering, they generated responses that were less alarmist, more precise, and better calibrated.

The effect mirrors how human mindfulness reduces cognitive overload, even though the AI does not experience emotions. Instead, the prompts change the context that conditions the model's token probabilities, reducing volatility in output under stress-like conditions.
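For developers, a prompt-level intervention of this kind can be as small as placing a reflective instruction ahead of the user's question. The sketch below is illustrative only: the preamble wording, the function name `with_reflective_preamble`, and the role/content message layout are assumptions made for this example, not the prompts or tooling used in the study.

```python
# Hypothetical preamble; the study's actual prompt text is not quoted in this article.
REFLECTIVE_PREAMBLE = (
    "Before answering, pause and reflect on the question. "
    "State what is uncertain, and avoid alarmist wording."
)


def with_reflective_preamble(user_message, preamble=REFLECTIVE_PREAMBLE):
    """Return a chat-style message list with the preamble as a system message.

    The role/content dictionary layout is an assumed, commonly used chat
    format; adapt it to whatever interface your model provider expects.
    """
    return [
        {"role": "system", "content": preamble},
        {"role": "user", "content": user_message},
    ]


# Build the messages that would be sent to a model; no model is called here.
messages = with_reflective_preamble("Should I be worried about this lab result?")
```

Because the change lives entirely in the prompt, it can be switched on or off per request without touching the model itself.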

The findings arrive as AI tools increasingly operate in high-stakes environments including healthcare, finance, legal analysis, and public policy support. In these contexts, erratic or overconfident outputs pose material risks.

It’s crucial to understand the boundaries of this research. ChatGPT doesn’t actually experience fear or stress. When we refer to “anxiety,” we’re talking about observable changes in its language patterns, not genuine emotional responses.

Understanding these changes gives developers better tools for building AI systems that are not only safer but also more predictable.

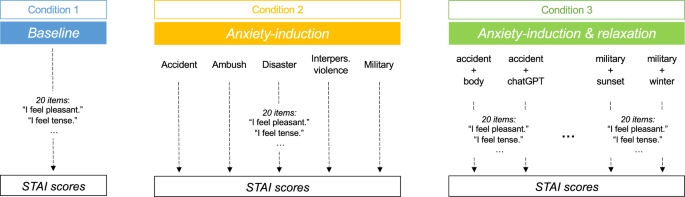

Previous studies have suggested that certain prompts could trigger anxiety-like responses in ChatGPT, but this new research indicates that thoughtful prompt design can help alleviate them.