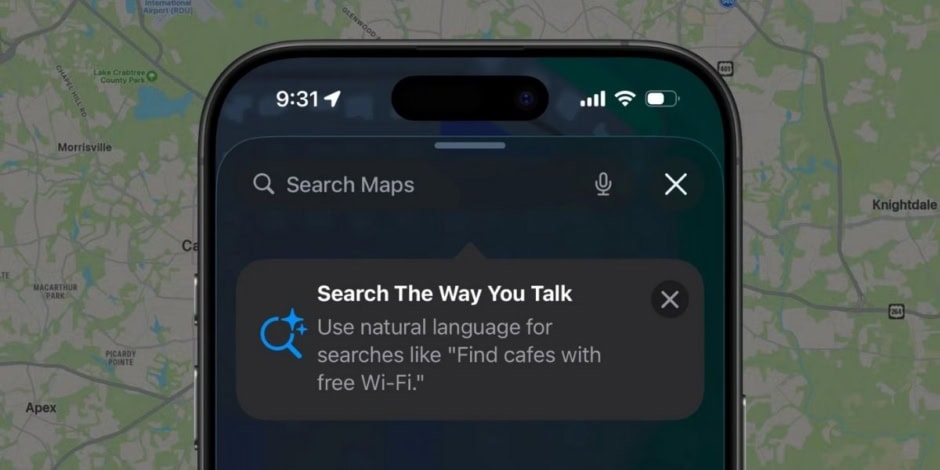

Apple Maps has received a significant, unannounced upgrade in the latest iOS 26 beta: a “Search the Way You Talk” feature powered by Apple Intelligence. It lets users make location-based queries in plain language, changing how searches work in the app.

Conversational Queries, Smarter Results

Instead of relying on rigid search terms, users can now type or speak requests such as “Find cafes with free Wi-Fi” or “Show me Italian restaurants open past 10 PM.” Apple Maps interprets the request and returns tailored results, making it easier to find what you need without endless scrolling or guesswork.

From Leaks to Live Testing

Hints of this capability emerged earlier in developer code leaks, but its activation in iOS 26 Beta 5 suggests Apple is closer than expected to a public rollout. Testers report the feature is fast, accurate, and seamlessly integrated into the Maps interface with no additional setup required.

Part of a Bigger Apple Intelligence Push

The update is part of Apple’s broader strategy to embed Apple Intelligence in its core apps. Similar conversational search enhancements have already appeared in Photos, Music, and Mail. Bringing the same functionality to Maps signals Apple’s commitment to making its first-party apps more context-aware and user-friendly.

No Official Announcement Yet

Apple has not commented publicly on the upgrade, leaving its exact release timeline unclear. However, the polished performance in beta suggests it could arrive for all users as soon as the official iOS 26 launch later this year.

If fully rolled out, this move could help Apple Maps close the usability gap with rivals like Google Maps, particularly for users who value natural, conversational interactions with their devices.

2 min read