Google just shifted the power balance in the AI industry. The tech giant has officially launched Gemini 3 Flash, and this fast, cost-effective model is now the default engine for the Gemini app and AI-powered search.

Google is clearly looking to steal OpenAI’s thunder. This release comes only six months after the debut of Gemini 2.5 Flash, yet the performance leap is massive. Gemini 3 Flash does more than just replace its predecessor: it actively challenges frontier models like GPT-5.2.

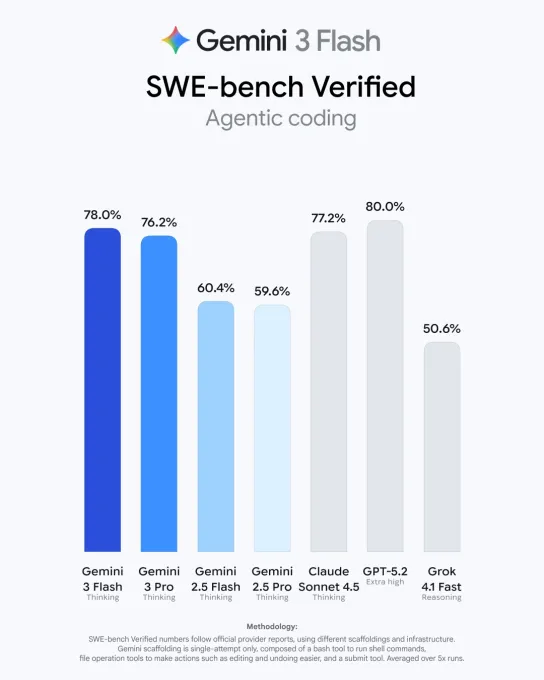

Crushing the Benchmarks

The numbers do not lie. Gemini 3 Flash delivered a 33.7% score on the “Humanity’s Last Exam” benchmark without using tools. For comparison, the newly released GPT-5.2 scored 34.5%. This means a “Flash” model is now providing near-pro-level expertise.

Furthermore, the model dominated the MMMU-Pro multimodality and reasoning benchmark. It secured an 81.2% score, outperforming every single competitor in the field. On coding, Gemini 3 Flash hit 78% on the SWE-bench Verified benchmark. While GPT-5.2 leads slightly at 80%, Gemini 3 Flash remains a top-tier choice for agentic coding.

Gemini 3 Flash: 3x Faster & More Efficient

Speed is the defining feature of this release. Gemini 3 Flash is three times faster than Gemini 2.5 Pro. Moreover, it is significantly smarter about how it processes information. For complex “thinking tasks”, the model uses 30% fewer tokens on average than the 2.5 Pro version.

The pricing reflects this efficiency. Google set the price at $0.50 per 1 million input tokens and $3.00 per 1 million output tokens. Although this is slightly higher than the price of the previous Flash model, the total cost often drops. Because the model uses fewer tokens to reach an answer, users save money on bulk tasks. In fact, data shows the median output for Gemini 3 Flash is only 1,239 tokens, well below Gemini 3 Pro’s 1,788 tokens.
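To make the pricing arithmetic concrete, here is a minimal Python sketch. It uses only the prices and the median token count quoted above; the function names are hypothetical, and applying the 30% token reduction to a price comparison is an illustrative assumption rather than a figure Google has published for Flash-to-Flash comparisons.

```python
# Prices quoted in the article (USD per 1 million tokens).
INPUT_PRICE_PER_M = 0.50
OUTPUT_PRICE_PER_M = 3.00


def request_cost(input_tokens: int, output_tokens: int,
                 input_price_per_m: float = INPUT_PRICE_PER_M,
                 output_price_per_m: float = OUTPUT_PRICE_PER_M) -> float:
    """Return the cost in USD of one request at per-million-token prices."""
    return (input_tokens * input_price_per_m
            + output_tokens * output_price_per_m) / 1_000_000


def break_even_price_ratio(token_reduction: float) -> float:
    """Return how much higher a per-token price can be before total cost
    rises, given a fractional cut in tokens used (0.30 means 30% fewer)."""
    return 1.0 / (1.0 - token_reduction)


# Output-only cost of a median-length response (1,239 tokens, per the article).
median_output_cost = request_cost(0, 1_239)

# Illustrative assumption: if a model needs 30% fewer tokens for the same
# task, its per-token price can be roughly 1.43x higher at equal total cost.
ratio = break_even_price_ratio(0.30)

print(f"Median response output cost: ${median_output_cost:.6f}")
print(f"Break-even price ratio at 30% fewer tokens: {ratio:.2f}x")
```

The takeaway is that a higher per-token price only raises the bill if the price increase outpaces the reduction in tokens consumed.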

The “Code Red” at OpenAI

This launch is already causing panic in the industry. Reports indicate that Sam Altman recently sent an internal “Code Red” memo to the OpenAI team. This move followed a dip in ChatGPT traffic as Google’s consumer market share rose. Currently, Google processes over 1 trillion tokens per day through its API.

Google is positioning Flash as the ultimate “workhorse” model. It handles video analysis, data extraction, and visual Q&A with ease. For example, users can now upload pickleball videos for tips or have the model guess sketches in real time. Developers can access the model now through Vertex AI, Gemini Enterprise, and Google’s new Antigravity coding tool.

The era of slow, expensive AI is ending. Google is proving that speed and intelligence can finally exist in the same package.

