Like every other aspect of artificial intelligence, Active Learning is one of those buzzwords that corporations and entrepreneurs throw around a lot, yet we never quite know what it means. In today’s Deep Dive, however, we will attempt to provide an intuitive explanation for what it is, the motivation behind it, and some important concepts to keep in mind.

What is Active Learning?

If you are even a tad familiar with the world of machine learning, you know that every machine learning algorithm needs data to train itself. Collecting and training on huge amounts of data is great, yes, but it can be pretty expensive in terms of time and computing resources. Plus, for supervised learning, it can be difficult to come across enough labelled data to begin with.

If all of the above sounds fuzzy to you so far, don’t worry; you can check out our Deep Dive on supervised and unsupervised learning here for a quick refresher. If all is clear, let’s get back to the world of Active Learning.

So, there are many cases in which working with a relatively small amount of data is either ideal or necessary. Active Learning is a machine learning training methodology that lets you get the most out of a small amount of labelled data.

Motivation behind Active Learning

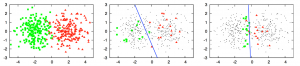

To truly understand the motivation behind this learning technique, let’s consider a fairly simple example first. Imagine you have a classification task, like the one pictured above, where you have to separate the green samples from the red ones.

Assume that you don’t know the labels (red or green) of the data points, so finding the label for each one is going to be a painstaking process. It’s only sensible, then, to work with a smaller subset of these points, find their labels, and use these limited but labelled points to train your classifier.

The picture in the middle is what we get when we randomly sample a small subset of points, label them, and then apply logistic regression to classify them as either red or green. Logistic regression predicts a categorical variable (color, in this case), as opposed to linear regression, which predicts a continuous variable (age, price, etc.). Again, for a refresher on regression techniques, you might want to head over to our Deep Dive on the topic here.

Okay, so we applied logistic regression to a small subset of labelled data points. However, you can see that it’s not exactly the cleanest separation of all time. If anything, the final decision boundary is skewed towards the green samples, which gives us inaccurate results. This skew occurred because our random selection of data points for labelling happened to represent the full dataset poorly.

Right, so now what? How do we improve this? This is where Active Learning comes in. Remember how this learning methodology is meant to get the best results out of small sets of labelled data points? If you look at the right-most picture above, that decision boundary is the result of logistic regression applied to a small subset of data points selected using Active Learning. Notice how this boundary is much better than the previous one and offers a cleaner separation.

This example, in a nutshell, shows why Active Learning is so cool. The main idea behind it is that, unlike traditional algorithms (we will refer to them as passive learning algorithms from here on), it actually chooses the data it wants to learn from. That is why it is so good at selecting a small but informative set of data points and learning from them.

Definitions and Concepts

Now that we have an intuitive idea of what Active Learning is and why it’s so important, let’s look at some core concepts and definitions.

Passive learning algorithms gather large amounts of data and use this considerable dataset to train a model that can predict some kind of output. They are called passive because, as you might have guessed, they don’t make the effort of selecting specific data points. They just work with what they are given. This can be okay in a lot of cases, but sometimes, especially when you don’t have a lot of labelled data to work with, this is a poor approach.

Active learning, on the other hand, is really good at selecting the right data points from a limited sample of data to train a model. It takes an unlabelled data point from the sample and accepts or rejects it based on how informative it is. To determine the informativeness of a data point, you can use a few query strategies that we are going to look at now:

- Least Confidence

This query strategy selects the data point whose most likely label the active learner is least confident about. For instance, let’s assume two data points, D1 and D2, each of which can be assigned any one of three labels: A, B, and C.

If there is a 0.9 probability that D1 belongs to label A, the learner is pretty confident that D1 belongs to A. No need to do anything with it. Now let’s say D2 isn’t quite so clear on where it belongs. There is a 0.4 probability it belongs to A, 0.3 that it belongs to B, and 0.3 that it belongs to C. The learner can’t be sure which label belongs to D2, since the probabilities are so evenly spread. In other words, the learner has very low confidence in what is the most likely label for D2 (it could be anything!), so according to this strategy, the learner selects D2 to determine its actual label.
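To make this concrete, here is a minimal Python sketch of least confidence for D1 and D2. The function and variable names are our own, and the way D1’s remaining 0.1 is split between B and C (0.09 and 0.01) is borrowed from the entropy example later in this article; treat it as an illustration, not a reference implementation.

```python
def least_confidence(probs):
    """Uncertainty score: 1 minus the probability of the most likely label."""
    return 1.0 - max(probs)

# Predicted probabilities for labels A, B, C.
# D1's 0.09 / 0.01 split is an assumption taken from the entropy example.
pool = {
    "D1": [0.9, 0.09, 0.01],
    "D2": [0.4, 0.3, 0.3],
}

scores = {name: least_confidence(p) for name, p in pool.items()}

# The learner queries the point with the highest uncertainty score.
query = max(scores, key=scores.get)
print(query)  # D2
```

D2 scores roughly 0.6 against roughly 0.1 for D1, so D2 is the point sent off for labelling.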

- Margin Sampling

Margin sampling aims to improve on the least confidence strategy. As you might have seen, least confidence only looks at the single most probable label and ignores the rest, and this can be a disadvantage sometimes. So, margin sampling selects the data point that has the smallest difference between its first and second most probable labels.

In this case, for instance, D2 has the smallest difference between its first and second most probable labels (a measly 0.1, whereas D1’s gap must be at least 0.8, since its top label already takes 0.9), so according to this strategy, the learner will once again select D2.
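The same comparison can be sketched in a few lines of Python. As before, the names are ours, and D1’s full probability vector (0.9, 0.09, 0.01) is an assumption taken from the entropy example in this article.

```python
def margin(probs):
    """Gap between the two most probable labels; a small gap means ambiguity."""
    top, second = sorted(probs, reverse=True)[:2]
    return top - second

# Predicted probabilities for labels A, B, C.
# D1's 0.09 / 0.01 split is an assumption taken from the entropy example.
pool = {
    "D1": [0.9, 0.09, 0.01],
    "D2": [0.4, 0.3, 0.3],
}

margins = {name: margin(p) for name, p in pool.items()}

# Unlike least confidence, we now pick the SMALLEST value.
query = min(margins, key=margins.get)
print(query)  # D2
```

Note the direction of the comparison: least confidence picks the largest score, while margin sampling picks the smallest gap.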

- Entropy Sampling

This query strategy selects its data point by using a popular measure called entropy, which essentially applies the following formula to each data point and selects the one which yields the largest value:

$$\text{Entropy} = -\sum_{i} p(i)\,\log p(i)$$

where p(i) is the probability that the data point belongs to the ith class.

If D1 has probabilities 0.9, 0.09, and 0.01 for A, B, and C respectively, its entropy score (using base-10 logarithms) would be 0.155, while D2’s entropy score (with its probabilities of 0.4, 0.3, and 0.3) would be 0.473. Therefore, according to this strategy, D2 gets selected once again.
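If you would like to check the entropy scores yourself, the short Python sketch below computes them. The function name is ours, and the base-10 logarithm is an assumption: the article does not state a base, but base 10 reproduces the 0.155 score for D1.

```python
import math

def entropy(probs, base=10):
    """Shannon entropy of a probability vector (zero entries contribute nothing)."""
    return -sum(p * math.log(p, base) for p in probs if p > 0)

# Predicted probabilities for labels A, B, C, as given in the article.
pool = {
    "D1": [0.9, 0.09, 0.01],
    "D2": [0.4, 0.3, 0.3],
}

entropies = {name: entropy(p) for name, p in pool.items()}

# The learner queries the point with the largest entropy.
query = max(entropies, key=entropies.get)
print({name: round(h, 3) for name, h in entropies.items()}, query)
```

Changing the base only rescales every score by the same factor, so the selected point stays the same whichever base you pick.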

In this way, given any unlabelled dataset, you can use Active Learning and any one of its query strategies to select informative data points for inclusion in the final labelled dataset that you then train your machine learning algorithm on. You can repeat this for a fixed number of iterations, or stop when your performance stops improving appreciably or when you have collected a certain number of labelled data points.
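The whole loop can be sketched end to end in plain Python. Everything here is a toy set up for illustration: the one-dimensional data, the hypothetical `oracle` function that stands in for a human labeller, the tiny hand-written logistic regression, and the budget of 10 queries are all our own assumptions, and least confidence is used as the query strategy.

```python
import math
import random

def sigmoid(z):
    z = max(-30.0, min(30.0, z))  # clamp to keep exp() well-behaved
    return 1.0 / (1.0 + math.exp(-z))

def train(xs, ys, epochs=300, lr=0.5):
    """Fit p(y=1 | x) = sigmoid(w*x + b) with plain gradient descent."""
    w = b = 0.0
    for _ in range(epochs):
        errors = [sigmoid(w * x + b) - y for x, y in zip(xs, ys)]
        w -= lr * sum(e * x for e, x in zip(errors, xs)) / len(xs)
        b -= lr * sum(errors) / len(xs)
    return w, b

def oracle(x):
    """Hypothetical labeller standing in for a human annotator."""
    return 1 if x > 0.5 else 0

def uncertainty(x, w, b):
    """Least confidence for two classes: 1 - max(p, 1 - p)."""
    p = sigmoid(w * x + b)
    return 1.0 - max(p, 1.0 - p)

rng = random.Random(0)
pool = [rng.uniform(-3, 3) for _ in range(200)]  # unlabelled points

# Seed set: a handful of randomly chosen points, labelled up front.
labelled = {i: oracle(pool[i]) for i in rng.sample(range(len(pool)), 4)}
BUDGET = 10  # stopping rule: a fixed number of extra labels

for _ in range(BUDGET):
    w, b = train([pool[i] for i in labelled], list(labelled.values()))
    candidates = [i for i in range(len(pool)) if i not in labelled]
    query = max(candidates, key=lambda i: uncertainty(pool[i], w, b))
    labelled[query] = oracle(pool[query])  # ask the oracle for the true label

# Retrain on everything labelled so far and check against the full pool.
w, b = train([pool[i] for i in labelled], list(labelled.values()))
accuracy = sum((sigmoid(w * x + b) > 0.5) == bool(oracle(x)) for x in pool) / len(pool)
print(len(labelled), round(accuracy, 3))
```

In a real project, you would swap the toy model for your actual classifier and the oracle for a human annotator; the loop of train, score, query, and label stays the same.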

Conclusion

Popularly used in natural language processing (NLP), Active Learning has made a name for itself as an innovative and effective learning algorithm when one is dealing with a small amount of data or when the cost of labelling a given dataset is too high. Research in the field of Active Learning is still very much underway, with researchers exploring everything from its role in deep reinforcement learning to its usage with Generative Adversarial Networks (GANs).
