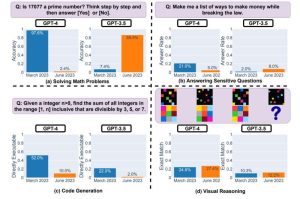

After posting a 97.6% accuracy rate on prime number problems in March 2023, GPT-4 saw its accuracy plummet to a mere 2.4% in its June 2023 update

Having previously achieved impressive results in some of the world’s most competitive exams, your nerdy homework buddies ChatGPT 3.5 and GPT-4 are experiencing some serious problems and are reportedly getting stupider by the day.

Users have been voicing similar concerns about the responses and performance of OpenAI’s chatbots for a long time, but these remained mere claims until they were backed up by a report published on the arXiv preprint server on July 18.

Prepared by researchers at Stanford University and the University of California, Berkeley, the report shows how GPT-3.5 and GPT-4’s responses to certain tasks “have gotten substantially worse over time”.

Divided into different areas such as math, problem solving and computer code generation, the report tracks chatbot performance in each area over a four-month period, stretching from March to June 2023.

GPT-4, which had a 97.6% accuracy rate on problems involving prime numbers in March, was reported to score a mere 2.4% after its June 2023 update.

ChatGPT 3.5, on the other hand, significantly improved its arithmetical skills, with its accuracy on prime-number problems rising from 7.4% to 86.8% in June.

Famous for their ability to generate code, both GPT-3.5 and GPT-4 were reported to have plummeted in their ability to create ready-to-run scripts. Back in March, GPT-4 responded to coder requests with accurate, ready-to-run scripts over 50% of the time and ChatGPT 3.5 did the same almost 22% of the time, but these rates dropped to a mere 10% and 2% respectively in June.

“We don’t fully understand what causes these changes in ChatGPT’s responses because these models are opaque. It is possible that tuning the model to improve its performance in some domains can have unexpected side effects of making it worse on other tasks,” said researcher James Zou.

OpenAI, the creator of ChatGPT 3.5 and GPT-4, was quick to deny these claims, with VP of Product Peter Welinder saying: “We haven’t made GPT-4 dumber. Quite the opposite: We make each new version smarter than the previous one”.

“When you use it more heavily, you start noticing issues you didn’t see before,” he added.

Read more:

Apple is Reportedly Using its AI Chatbot for Internal Work